Django is a fantastic framework, not least because it includes everything needed to quickly create web apps. But the developers should not be the only ones benefiting from this. The app should also be fast for users.

The official documentation has a chapter about performance and optimization with good advice. In this article I want to build on that and show tools and methods I've used in the past to reduce page load time.

Measure & collect data

Performance benchmarking and profiling are essential to any optimization work. Blindly applying optimizations could add complexity to the code base and maybe even make things worse.

We need performance data to know which parts to focus on and to validate that any changes have the desired effect.

django-debug-toolbar

The django-debug-toolbar is easy to use and has a nice interface. It shows how much time is spent on each SQL query, offers a quick button to get the EXPLAIN output for that query, and surfaces a few other interesting details.

The template-profiler is an extra panel that adds profiling data about the template rendering process.

There are, however, a few drawbacks to the django-debug-toolbar. Because of how it integrates into the site, it only makes sense to use it in a development environment where DEBUG = True.

It also comes with a huge performance penalty itself.
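As a rough sketch of how the toolbar can be kept out of anything but development, the settings fragment below only registers it when DEBUG is on. The app label and middleware path are the ones the django-debug-toolbar package documents; the surrounding lists are shortened placeholders, so treat this as something to merge into an existing settings.py rather than a complete configuration.

```python
# settings.py fragment (sketch). The lists here are shortened placeholders;
# in a real project they already contain the other apps and middleware.
DEBUG = True  # development only

INSTALLED_APPS = [
    "django.contrib.staticfiles",  # the toolbar serves its assets via staticfiles
]
MIDDLEWARE = []

if DEBUG:
    # Register the toolbar only in development, never in production.
    INSTALLED_APPS.append("debug_toolbar")
    # Place the middleware early so it can wrap the whole request.
    MIDDLEWARE.insert(0, "debug_toolbar.middleware.DebugToolbarMiddleware")
    # The toolbar is only rendered for requests from these addresses.
    INTERNAL_IPS = ["127.0.0.1"]
```

The toolbar's URLs also have to be included in the project's urls.py, as described in its installation instructions.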

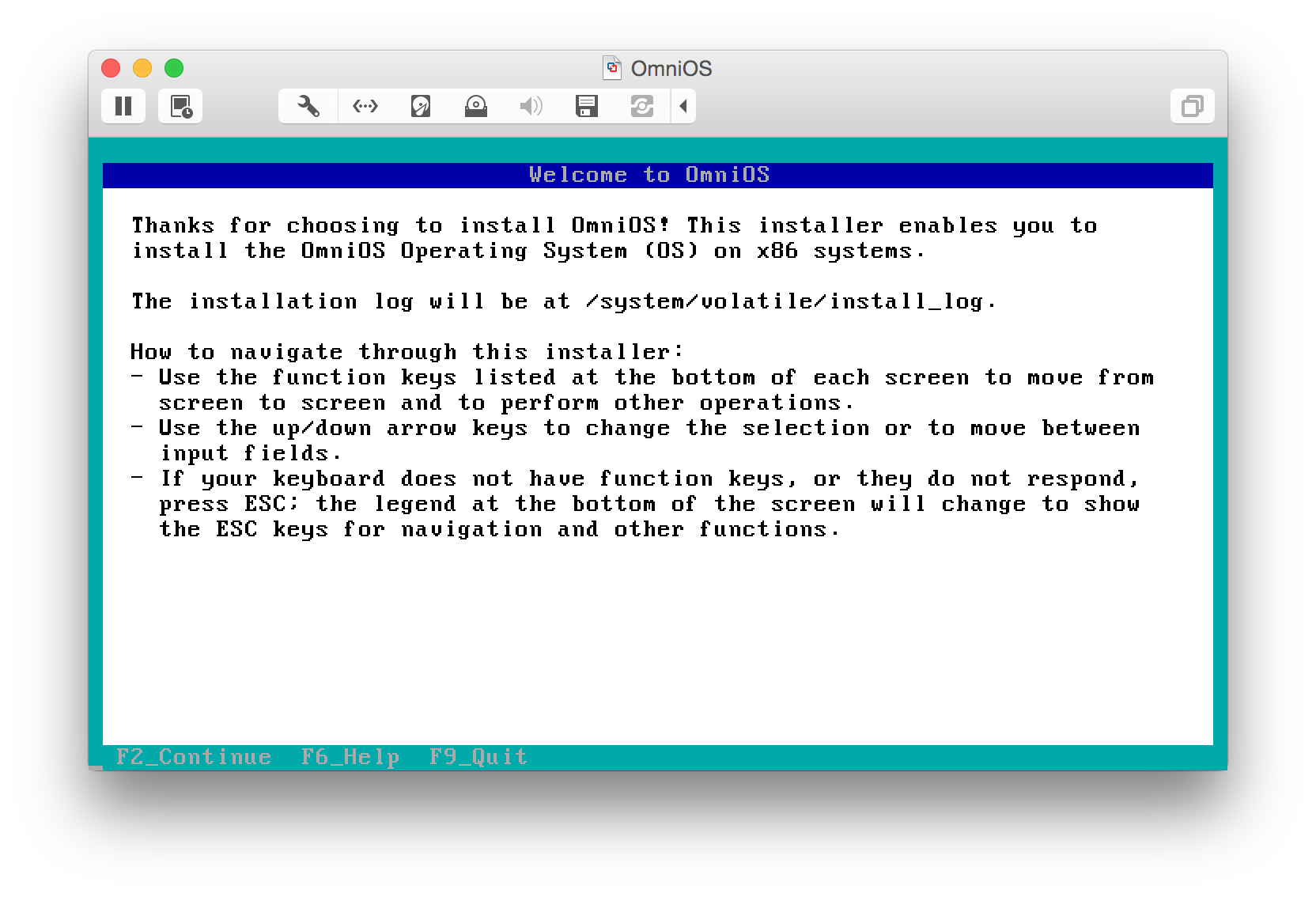

DTrace

DTrace doesn't have these limitations. It can be used on production services and gives many details well beyond the Python part of the project. You can look deep into the database, Python interpreter, webserver and operating system to get a complete picture of where the time is spent.

Instead of in a pretty browser UI, this happens in the CLI. DTrace scripts are written in an AWK-like syntax. There is also a collection of useful scripts in the dtracetools package. When using the Joyent pkgsrc repos, it can be installed with:

    pkgin install dtracetools

One of the useful scripts in this package is the dtrace-mysql_query_monitor.d which will show all MySQL queries:

    Who               Database  Query  QC  Time(ms)
    kumquat@localhost kumquat   set autocommit=0 N 0
    kumquat@localhost kumquat   set autocommit=1 N 0
    kumquat@localhost kumquat   SELECT `django_session`.`session_key`, `django_session`.`session_data`, `django_session`.`expire_date` FROM `django_session` WHERE (`django_session`.`session_key` = 'w4ty3oznpqesvoieh64me1pvdfwjhr2k' AND `django_session`.`expire_date` > '2019-11-18 13:04: N 0
    kumquat@localhost kumquat   SELECT `auth_user`.`id`, `auth_user`.`password`, `auth_user`.`last_login`, `auth_user`.`is_superuser`, `auth_user`.`username`, `auth_user`.`first_name`, `auth_user`.`last_name`, `auth_user`.`email`, `auth_user`.`is_staff`, `auth_user`.`is_active`, `auth_u Y 0
    kumquat@localhost kumquat   SELECT `cron_cronjob`.`id`, `cron_cronjob`.`when`, `cron_cronjob`.`command` FROM `cron_cronjob` Y 0
    ...

To do something similar for PostgreSQL:

    dtrace -n '
    #pragma D option quiet
    #pragma D option switchrate=10hz
    #pragma D option strsize=2048

    dtrace:::BEGIN {
        printf("%-9s %-80s\n", "Time(ms)", "Query");
    }

    postgres*::query-start {
        /* thread-local, so concurrent backends do not overwrite each other */
        self->start = timestamp;
    }

    postgres*::query-done /self->start/ {
        printf("%-9d %-80s\n\n", ((timestamp - self->start) / 1000 / 1000), copyinstr(arg0));
        self->start = 0;
    }
    '

The output will look like this:

    Time(ms)  Query
    7         SELECT "auth_user"."id", "auth_user"."password", "auth_user"."last_login", "auth_user"."is_superuser", "auth_user"."username", "auth_user"."first_name", "auth_user"."last_name", "auth_user"."email", "auth_user"."is_staff", "auth_user"."is_active", "auth_user"."date_joined" FROM "auth_user" WHERE "auth_user"."username" = 'wiedi'
    ...

To look into the Python process itself, there are a few very useful dtrace-py_* scripts in the dtracetools package. For example, dtrace-py_cputime.d will show the number of calls to each function as well as the inclusive and exclusive CPU time:

    Count,
    FILE               TYPE   NAME                          COUNT
    ...
    base.py            func   render                         1431
    sre_parse.py       func   get                            1607
    base.py            func   render_annotated               1621
    functional.py      func   <genexpr>                      1768
    base.py            func   resolve                        1888
    sre_parse.py       func   __getitem__                    2011
    sre_parse.py       func   __next                         2104
    related.py         func   <genexpr>                      2324
    __init__.py        func   <genexpr>                      3974
    regex_helper.py    func   next_char                      9033
    -                  total  -                            113741

    Exclusive function on-CPU times (us),
    FILE               TYPE   NAME                          TOTAL
    ...
    base.py            func   _resolve_lookup               22070
    base.py            func   resolve                       22810
    base.py            func   render                        22997
    related.py         func   foreign_related_fields        23543
    functional.py      func   wrapper                       25928
    defaulttags.py     func   render                        26218
    base.py            func   __init__                      33303
    sre_parse.py       func   _parse                        42869
    regex_helper.py    func   next_char                     44579
    regex_helper.py    func   normalize                     71313
    -                  total  -                           1809937

    Inclusive function on-CPU times (us),
    FILE               TYPE   NAME                          TOTAL
    ...
    wsgi.py            func   __call__                    1790427
    sync.py            func   handle_request              1804334
    sync.py            func   handle                      1806034
    sync.py            func   accept                      1806870
    loader_tags.py     func   render                      2452085
    base.py            func   _render                     2886611
    base.py            func   render_annotated            4563513
    base.py            func   render                      6018042
    deprecation.py     func   __call__                   12147994
    exception.py       func   inner                      13873367

In this case a noticeable amount of time is spent on regex handling (regex_helper.py and sre_parse.py), probably related to the URL routing.

cProfile

The Python standard library comes with cProfile, which collects precise timings of function calls. Together with the Django test client, it can be used to automate performance testing.

Automating the performance data collection step as much as possible allows for quick iterations. For a recent project I created a dedicated manage.py command to profile the most important URLs. It looked similar to this:

    import cProfile
    import io
    import pstats

    from django.contrib.auth.models import User
    from django.core.management.base import BaseCommand
    from django.test import Client


    def profile_url(url):
        c = Client()
        # Log in as an existing user (assumes at least one user exists)
        # so that views requiring authentication can be profiled.
        c.force_login(User.objects.first())

        # Profile only the request itself, not the client setup.
        pr = cProfile.Profile()
        pr.enable()
        r = c.get(url, follow=True)
        pr.disable()
        assert r.status_code == 200

        # Print the 35 most expensive calls, sorted by cumulative time.
        s = io.StringIO()
        pstats.Stats(pr, stream=s).sort_stats('cumulative').print_stats(35)
        print(s.getvalue())


    class Command(BaseCommand):
        help = 'run profiling functions'

        def handle(self, *args, **options):
            profile_url("/")
            profile_url("/contacts/")
            profile_url("/events/")
            profile_url("/search/?q=info&type=all")

Instead of just printing the statistics they can also be saved to disk with pr.dump_stats(fn). This allows further processing with flameprof to create FlameGraphs.
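A minimal sketch of that save-and-reload round trip, using only the standard library. The slow_sum workload and the profile_to_file helper are hypothetical stand-ins; in the management command above, the profiled call would be the test-client request instead.

```python
import cProfile
import os
import pstats
import tempfile


def slow_sum(n):
    """Stand-in workload; a real run would profile a test-client request."""
    return sum(i * i for i in range(n))


def profile_to_file(func, path, *args):
    """Profile func(*args) and write the raw statistics to path."""
    pr = cProfile.Profile()
    pr.enable()
    func(*args)
    pr.disable()
    pr.dump_stats(path)


stats_path = os.path.join(tempfile.mkdtemp(), "slow_sum.prof")
profile_to_file(slow_sum, stats_path, 100_000)

# The saved file can be loaded again later, here to print the top entries.
stats = pstats.Stats(stats_path)
stats.sort_stats("cumulative").print_stats(5)
```

Keeping one file per URL and per run also makes it possible to compare profiles before and after a change.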

%timeit

Another handy utility from the standard library is timeit. You'll often find examples like this:

    $ python -m timeit '"-".join(str(n) for n in range(100))'
    10000 loops, best of 5: 30.2 usec per loop

This is useful when experimenting with small statements.

To take this one step further I recommend you install IPython which will transform the Django manage.py shell into a very powerful development environment.

Besides tab-completion and a thousand other features you'll have the %timeit magic.

    In [1]: from app.models import *

    In [2]: e = Events.objects.first()

    In [3]: %timeit e.some_model_method()
    703 ns ± 7.05 ns per loop (mean ± std. dev. of 7 runs, 1000000 loops each)
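Outside of IPython, for example in a script that should run repeatedly, a similar measurement can be sketched with the timeit module directly. The join_numbers function is just a placeholder for whatever statement is being investigated, and the loop counts are arbitrary.

```python
import timeit


def join_numbers():
    # Placeholder for the code under test.
    return "-".join(str(n) for n in range(100))


LOOPS = 1_000

# Run 5 rounds of LOOPS calls each; every entry is the total time of a round.
timings = timeit.repeat(join_numbers, number=LOOPS, repeat=5)

# The fastest round is the least disturbed by other activity on the machine.
best_usec = min(timings) / LOOPS * 1e6
print(f"{LOOPS} loops, best of 5: {best_usec:.1f} usec per loop")
```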

Optimize

Once you know which parts of your project are the slowest, you can start improving them. Usually most of the time is spent on database queries, followed by template rendering.

Although every project might need different optimizations there are some common patterns.

Prefetch related objects

When you display a list of objects in your template and access some field of a related object this will trigger an additional database query. This can easily add up and result in huge numbers of queries just for one request.

When you know which related fields you will need you can tell Django to get these in a more efficient way. The two important methods are select_related() and prefetch_related().

select_related() works by using a SQL JOIN, so the related objects arrive in the same query. prefetch_related() instead runs one additional query per lookup and joins the results in Python. Both are easy to use, require nearly no modifications to existing code and can result in huge improvements.
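To make the difference in query counts concrete, here is a sketch of the three access patterns at the SQL level. It uses sqlite3 from the standard library rather than the Django ORM, and the book/author tables are hypothetical; the comments name the ORM calls that would produce a comparable pattern for equally hypothetical Book and Author models.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE author (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE book (id INTEGER PRIMARY KEY, title TEXT,
                       author_id INTEGER REFERENCES author(id));
    INSERT INTO author VALUES (1, 'Ann'), (2, 'Bob');
    INSERT INTO book VALUES (1, 'A1', 1), (2, 'A2', 1), (3, 'B1', 2);
""")

BOOKS = "SELECT title, author_id FROM book ORDER BY id"


def n_plus_one(conn):
    """Like Book.objects.all() with book.author accessed in a loop."""
    queries = 1
    result = []
    for title, author_id in conn.execute(BOOKS).fetchall():
        row = conn.execute("SELECT name FROM author WHERE id = ?", (author_id,))
        queries += 1  # one extra query per book
        result.append((title, row.fetchone()[0]))
    return result, queries


def joined(conn):
    """Like Book.objects.select_related('author'): a single JOIN."""
    sql = ("SELECT book.title, author.name FROM book "
           "JOIN author ON author.id = book.author_id ORDER BY book.id")
    return conn.execute(sql).fetchall(), 1


def prefetched(conn):
    """Like Book.objects.prefetch_related('author'): one extra IN query."""
    books = conn.execute(BOOKS).fetchall()
    ids = sorted({author_id for _, author_id in books})
    marks = ",".join("?" * len(ids))
    names = dict(conn.execute(
        f"SELECT id, name FROM author WHERE id IN ({marks})", ids))
    # The "join" happens here, in Python.
    return [(title, names[author_id]) for title, author_id in books], 2


for func in (n_plus_one, joined, prefetched):
    rows, queries = func(conn)
    print(f"{func.__name__}: {queries} queries, {len(rows)} rows")
```

The first variant grows by one query for every row in the list, while the other two stay constant no matter how many books are displayed.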

Indexes

Another easy to apply performance tweak is to make sure you have the right database indexes.

Whenever you use a field to filter, and in some cases for order_by, you should consider whether you need an index. Creating an index is as easy as adding db_index = True to your model field, then creating and running the resulting migration. Be sure to validate the improvement with SQL EXPLAIN.
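The validation step can be sketched as follows. This example uses sqlite3 and its EXPLAIN QUERY PLAN statement so that it runs without a database server; the event table, the starts_on column and the index name are made up for illustration, and the exact plan wording differs between databases and versions. In Django the same check can be done on a queryset with its explain() method.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE event (id INTEGER PRIMARY KEY, title TEXT, starts_on TEXT)"
)
conn.executemany(
    "INSERT INTO event (title, starts_on) VALUES (?, ?)",
    [(f"event {i}", f"2019-11-{i % 28 + 1:02d}") for i in range(1000)],
)

QUERY = "SELECT id, title FROM event WHERE starts_on = ?"


def query_plan(conn, sql, params):
    """Return SQLite's description of how it would execute the query."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    # The human-readable description is the last column of each row.
    return " | ".join(row[-1] for row in rows)


before = query_plan(conn, QUERY, ("2019-11-18",))

# Roughly what the migration for db_index = True does on the database side.
conn.execute("CREATE INDEX idx_event_starts_on ON event (starts_on)")
after = query_plan(conn, QUERY, ("2019-11-18",))

print("before:", before)  # a full table scan
print("after: ", after)   # a search using idx_event_starts_on
```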

Cache

Caching is a huge topic and there are many ways to improve Django performance with it. Where a cache sits, at which layer, and for how long entries live all depend on the environment and the performance characteristics of the project.

The Django cache framework is an easy way to leverage Memcached at various layers. Separately, the @cached_property decorator, which stores a method's result on the instance after the first access, is often helpful for fat model methods.
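The effect of @cached_property can be sketched without Django, because the standard library ships a decorator with very similar behaviour in functools (Python 3.8+). Django's own version lives in django.utils.functional. The Event class and its attendee_summary method are hypothetical.

```python
from functools import cached_property


class Event:
    def __init__(self, attendees):
        self.attendees = attendees
        self.calls = 0  # only here to show how often the method body runs

    @cached_property
    def attendee_summary(self):
        # Imagine an expensive calculation or several queries here.
        self.calls += 1
        return ", ".join(sorted(self.attendees))


event = Event(["bob", "alice"])
first = event.attendee_summary   # computed on first access
second = event.attendee_summary  # served from the instance, no recomputation
print(first, "| body executed", event.calls, "time(s)")
```

The value lives as long as the instance does, which in a typical view means for the duration of one request. Deleting the attribute (del event.attendee_summary) forces a fresh calculation on the next access.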

Precalculate

Some calculations just take too long for the usual time budget of an HTTP request.

In these cases I've found it useful to precalculate the needed data in a background process.

This can be done with a task queue like Celery or, with a little less complexity, with a manage.py command that either runs continuously as a service or is called from a cron job.
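The general shape of this pattern, reduced to the standard library, might look like the sketch below. Everything in it is hypothetical: expensive_report stands in for the slow calculation, and a JSON file stands in for wherever the result is stored (in a Django project that would more likely be a model or the cache). The point is only the split between a slow writer that runs in the background and a fast reader on the request path.

```python
import json
import os
import tempfile

# Hypothetical location for the precalculated result.
REPORT_PATH = os.path.join(tempfile.mkdtemp(), "report.json")


def expensive_report():
    """Stand-in for a calculation that is too slow for a request."""
    return {"total": sum(range(1_000_000))}


def precalculate(path=REPORT_PATH):
    """Run by the background job (service, cron job or task queue)."""
    data = expensive_report()
    # Write to a temporary file first, then swap it in atomically so a
    # reader never sees a half-written result.
    fd, tmp_path = tempfile.mkstemp(dir=os.path.dirname(path))
    with os.fdopen(fd, "w") as f:
        json.dump(data, f)
    os.replace(tmp_path, path)


def load_report(path=REPORT_PATH):
    """Run in the request: only reads the stored result."""
    with open(path) as f:
        return json.load(f)


precalculate()
print(load_report())
```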

Beyond Django

Beyond these common cases there are many further ways to optimize web projects. Changing the database schema by denormalizing might improve some queries. Other techniques will depend heavily on the circumstances of the project.

There are usually also plenty of opportunities to optimize further up the stack as well as below. Once you measure performance from the browser, the time spent inside Django becomes just one part of the total. With that new data you can start work on DOM rendering, CSS and JS, reducing request size for images or better network routing.

Also looking at lower levels can have huge benefits. Even small improvements at a lower level can result in performance gains simply because these parts are run so often.

A recent example was a gain of ~120ms per request from changing how the Python interpreter was compiled. The CPython version tested had the retpoline mitigation enabled. This was an isolated internal service where the threat model did not require this.

Just compiling without -mindirect-branch=thunk-inline -mfunction-return=thunk-inline -mindirect-branch-register resulted in a large performance boost.

If you have a web project in need of some performance optimization feel free to reach out!